Deep learning has transformed artificial intelligence, enabling machines to learn from data and make complex decisions using neural networks. Neural networks are computational models loosely inspired by the human brain. In this blog, we’ll take a closer look at neural networks and deep learning and explain them in an easy, straightforward manner.

What is a Neural Network?

Neural networks are computational models consisting of interconnected units called neurons, which process and transmit information. They are composed of layers: an input layer, one or more hidden layers, and an output layer. The input layer receives data, and each subsequent layer performs calculations on the data it receives. Neurons in adjacent layers are linked by weighted connections, and each connection’s weight determines its importance in the information flow. The output layer produces the final predictions based on the processed data.
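To make the idea of weighted connections concrete, here is a minimal sketch in plain Python of how one layer could turn its inputs into outputs. The function name `layer_forward`, the example numbers, and the per-neuron bias terms are illustrative assumptions, not something prescribed by the description above.

```python
def layer_forward(inputs, weights, biases):
    """Compute one weighted sum per neuron in a layer.

    weights[j][i] is the (hypothetical) weight of the connection from
    input i to neuron j; biases[j] is an assumed per-neuron offset.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs))
        outputs.append(weighted_sum + bias)
    return outputs


# Two input features feeding a layer of two neurons.
features = [1.0, 2.0]
weights = [[0.5, -1.0], [0.25, 0.75]]
biases = [0.0, 1.0]
print(layer_forward(features, weights, biases))  # [-1.5, 2.75]
```

A larger weight (in absolute value) makes the corresponding input count for more in the neuron's sum, which is what "importance in the information flow" means in practice.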

How Do Neural Networks Work?

- Input Layer

The neural network begins with an input layer that receives the initial data. Each input neuron represents a feature or attribute of the input data.

- Weighted Connections

Each input neuron is connected to the neurons in the next layer through weighted connections. These weights determine the strength or importance of the connection. The weights are initially assigned randomly and are updated during the training process.

- Activation Function

Each neuron in the hidden layers and the output layer receives inputs from the previous layer’s neurons. It calculates the weighted sum of these inputs and applies an activation function to introduce non-linearity into the network. The activation function determines whether the neuron will be activated and how much information it will pass to the next layer.

- Forward Propagation

During forward propagation, the information moves through the network from the input layer to the output layer. Each neuron’s output becomes an input for the next layer’s neurons, and this continues until the output layer produces the final predictions.

- Loss Function

The output layer’s predictions are compared to the desired or target values using a loss function. The loss function quantifies the difference between the predicted and actual values, providing a measure of the network’s performance.

- Backpropagation

The training process involves adjusting the weights in the network to minimize the loss. Backpropagation is used to calculate the gradients of the loss function with respect to the network’s weights. The gradients indicate the direction and magnitude of weight updates required to reduce the loss.

- Optimization

Optimization algorithms like stochastic gradient descent are used to update the weights based on the calculated gradients. These algorithms iteratively adjust the weights in the network, moving them in the direction that reduces the loss.

- Training Iterations

The training process runs for multiple epochs (full passes over the training data), each made up of many iterations in which the forward propagation and backpropagation steps are repeated on different training samples. The network gradually learns to make more accurate predictions by adjusting its weights based on the training data.

- Testing and Inference

Once the neural network is trained, it can be used for testing and inference. New data is fed into the network and forward propagation is performed to obtain predictions or outputs.
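The steps above can be sketched end to end in plain Python. This is only an illustrative toy under stated assumptions: a single hidden layer of three neurons, sigmoid activations, a squared-error loss, a fixed learning rate of 0.5, and the logical OR function as training data. All names here (`forward`, `train`, `or_samples`) are hypothetical, and real projects would normally rely on a deep learning library rather than hand-written gradients.

```python
import math
import random


def sigmoid(z):
    # Activation function: squashes any number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward propagation through one hidden layer and one output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def train(samples, hidden_size=3, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    # Weighted connections start at random values (assumed: biases at zero).
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                for _ in range(hidden_size)]
    b_hidden = [0.0] * hidden_size
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    b_out = 0.0
    loss_history = []

    for _ in range(epochs):
        epoch_loss = 0.0
        for x, target in samples:
            hidden, y = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Loss function: squared error between prediction and target.
            epoch_loss += (y - target) ** 2
            # Backpropagation: chain rule from the loss back to each weight.
            grad_out = 2 * (y - target) * y * (1 - y)
            grad_hidden = [grad_out * w_out[j] * hidden[j] * (1 - hidden[j])
                           for j in range(hidden_size)]
            # Optimization: one stochastic gradient descent step per sample.
            for j in range(hidden_size):
                w_out[j] -= lr * grad_out * hidden[j]
                b_hidden[j] -= lr * grad_hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] -= lr * grad_hidden[j] * x[i]
            b_out -= lr * grad_out
        loss_history.append(epoch_loss / len(samples))

    def predict(x):
        # Inference: forward propagation only, no weight updates.
        return forward(x, w_hidden, b_hidden, w_out, b_out)[1]

    return predict, loss_history


# Toy dataset (an assumption for illustration): the logical OR function.
or_samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
              ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
predict, loss_history = train(or_samples)
print(f"first-epoch loss: {loss_history[0]:.4f}")
print(f"last-epoch loss:  {loss_history[-1]:.4f}")
print(f"prediction for [0, 0]: {predict([0.0, 0.0]):.3f}")
```

Each pass of the inner loop performs forward propagation, measures the loss, backpropagates gradients, and takes one optimization step. The average loss per epoch should fall as training proceeds, and `predict` is the inference step applied to new inputs.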

Advantages of Neural Networks

- Non-linearity

Neural networks can learn non-linear relationships between inputs and outputs, which enables them to model complex patterns and make accurate predictions.

- Adaptability

Neural networks can adapt and learn from new data, allowing them to improve their performance over time.

- Parallel Processing

Neural networks can perform computations in parallel, making them suitable for tasks that require high computational efficiency.

- Feature Extraction

Neural networks can automatically learn relevant features from raw data, reducing the need for manual feature engineering.

Limitations of Neural Networks

- Training Complexity

Training neural networks can be computationally intensive and time-consuming, especially for large and deep networks.

- Overfitting

Neural networks are prone to overfitting, where the model performs well on training data but fails to generalize to unseen data.

- Need for Large Datasets

Neural networks require large datasets to generalize well and learn representative patterns, which may pose challenges in domains with limited data availability.
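One common way to notice the overfitting described above is to hold back part of the data and compare the loss on it with the training loss. The sketch below is a minimal illustration in plain Python; the helper names `holdout_split` and `generalization_gap`, the 20% validation fraction, and the example loss values are all assumptions made for this example.

```python
import random


def holdout_split(examples, validation_fraction=0.2, seed=0):
    """Shuffle the examples and set aside a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


def generalization_gap(train_loss, validation_loss):
    """A large positive gap suggests the model is overfitting."""
    return validation_loss - train_loss


train_set, validation_set = holdout_split(range(10))
print(len(train_set), len(validation_set))  # 8 2

# Hypothetical losses: low on training data, much higher on held-out data.
print(generalization_gap(train_loss=0.05, validation_loss=0.40) > 0.1)  # True
```

The model never trains on the validation examples, so a loss that is much higher there than on the training set is a sign that it has memorized the training data instead of learning patterns that generalize.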

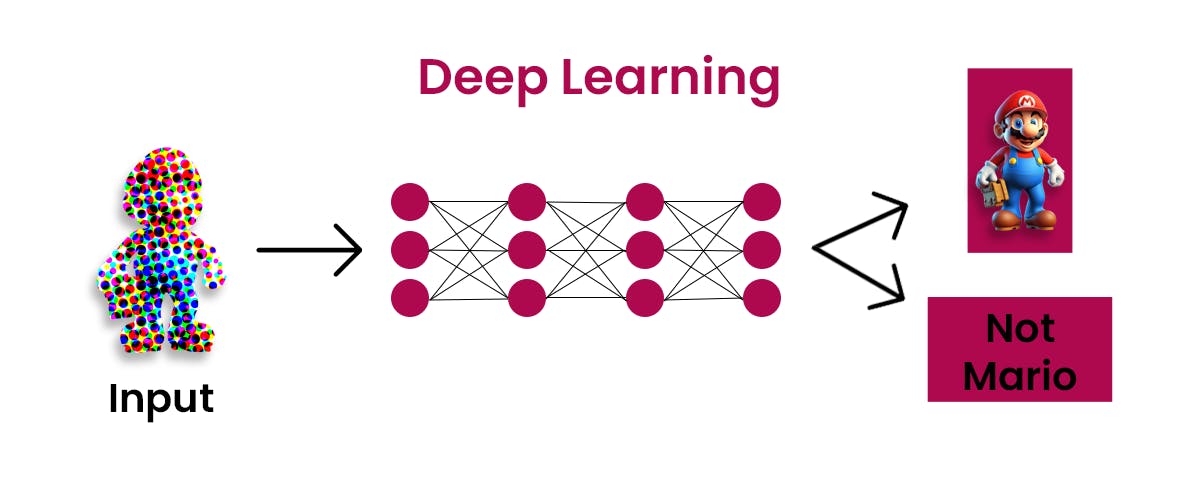

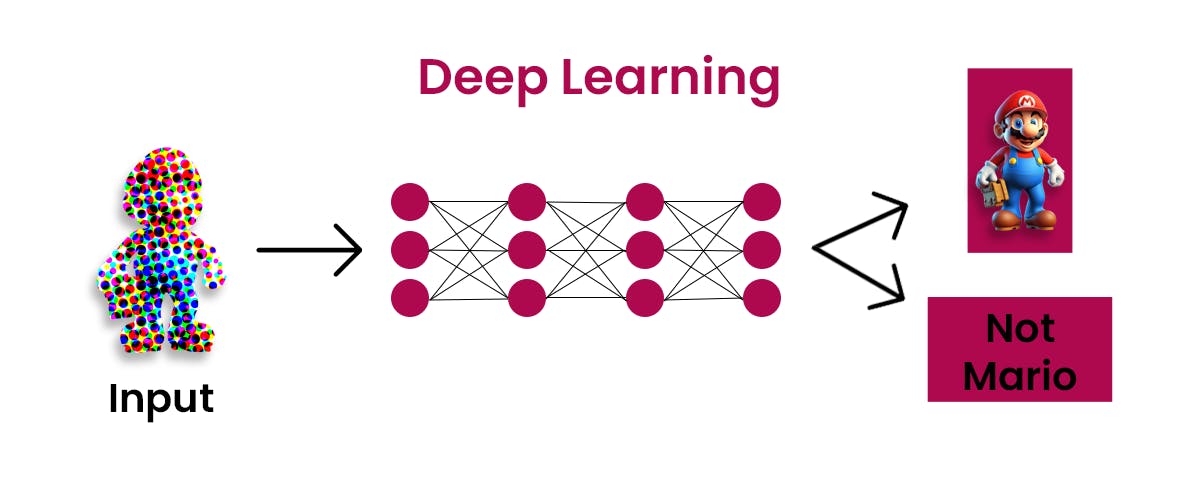

What is Deep Learning?

Deep learning extends neural networks by using deep neural networks (DNNs), which have multiple hidden layers. DNNs enable the automatic learning of hierarchical representations and abstract features from raw data, leading to improved performance on complex tasks.

How Does Deep Learning Work?

Deep learning is a subset of machine learning that utilizes artificial neural networks with multiple hidden layers to extract and learn hierarchical representations of data. It has become very popular recently because it achieves top performance on difficult problems in areas such as computer vision, natural language processing, and speech recognition. Here’s a step-by-step explanation of how deep learning works.

- Architecture

Deep learning models consist of multiple layers of interconnected artificial neurons, where each layer processes and transforms the input data. The first layer is the input layer, which receives the raw data. The subsequent layers are hidden layers, and the final layer is the output layer, which produces the predictions or outputs.

- Deep Architectures

Deep learning models are characterized by deep architectures, meaning they have multiple hidden layers between the input and output layers. These hidden layers enable the network to learn increasingly abstract and complex representations of the data as information flows through the network.

- Hierarchical Feature Learning

Each hidden layer in a deep learning model learns and extracts features from the previous layer’s output. In an image model, for example, the lower layers capture low-level features like edges or textures, while higher layers learn more abstract, high-level features. This hierarchical feature learning allows deep learning models to automatically learn and represent complex patterns and structures in the data.

- Non-Linear Activation Functions

Activation functions introduce non-linearity into deep learning models, allowing them to model complex relationships in the data. Popular activation functions include the sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent) functions. These activation functions determine the output of each neuron based on its inputs.

- Weight Initialization

The weights in a deep learning model are initialized randomly before training. Proper initialization is crucial to ensure that the network starts learning effectively. Techniques like Xavier or He initialization help to alleviate the vanishing or exploding gradient problem, which can occur during training.

- Training

Deep learning models are trained using large labeled datasets and the backpropagation algorithm. During training, input data is fed forward through the network, and the output predictions are compared to the ground truth labels using a loss function, such as cross-entropy or mean squared error. The gradients of the loss with respect to the model’s weights are calculated using backpropagation, and optimization algorithms like stochastic gradient descent (SGD) or its variants are used to update the weights iteratively.

- Regularization Techniques

Deep learning models are prone to overfitting, where they perform well on the training data but generalize poorly to new or unseen data. To mitigate overfitting, various regularization techniques are employed, such as dropout, which randomly deactivates a fraction of the neurons during training, and L1 or L2 regularization, which add a penalty on the weights to the loss function: L1 encourages sparse weights, while L2 encourages small ones.

- Evaluation and Inference

Once the deep learning model is trained, it can be evaluated using separate validation and test datasets to assess its performance. Inference or prediction involves passing new, unseen data through the trained model to obtain predictions or outputs.
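Several of the building blocks above (ReLU activation, He initialization, dropout, and L1/L2 penalties) are small enough to sketch in plain Python. This is a hedged illustration, not a reference implementation: the function names are hypothetical, the dropout shown is the common "inverted" variant that rescales the surviving activations (an assumption beyond the description above), and the layer sizes are arbitrary.

```python
import math
import random


def relu(z):
    # ReLU activation: passes positive values through, zeroes out the rest.
    return max(0.0, z)


def he_init(n_inputs, n_outputs, rng):
    """He initialization: Gaussian weights with variance 2 / n_inputs."""
    std = math.sqrt(2.0 / n_inputs)
    return [[rng.gauss(0.0, std) for _ in range(n_inputs)]
            for _ in range(n_outputs)]


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a random fraction of activations during
    training and rescale the survivors; do nothing at inference time."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l1_penalty(weights, strength):
    # Sum of absolute weights: pushes many weights toward exactly zero.
    return strength * sum(abs(w) for row in weights for w in row)


def l2_penalty(weights, strength):
    # Sum of squared weights: discourages large weights.
    return strength * sum(w * w for row in weights for w in row)


def deep_forward(x, layers, rng, dropout_rate=0.0, training=False):
    """Pass x through a stack of ReLU hidden layers (output layer omitted)."""
    activations = x
    for weights in layers:
        activations = [relu(sum(w * a for w, a in zip(row, activations)))
                       for row in weights]
        activations = dropout(activations, dropout_rate, rng, training)
    return activations


rng = random.Random(0)
# Three stacked hidden layers: 4 inputs -> 8 -> 8 -> 3 features.
layers = [he_init(4, 8, rng), he_init(8, 8, rng), he_init(8, 3, rng)]
features = deep_forward([0.5, -1.0, 2.0, 0.1], layers, rng)
print(len(features))  # 3
print(l2_penalty([[1.0, -2.0]], strength=0.5))  # 2.5
```

During training, the chosen penalty would be added to the loss before backpropagation and `deep_forward` would be called with `training=True`. At evaluation and inference time dropout is switched off, so every neuron contributes to the prediction.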

Advantages of Deep Learning

- Automatic Feature Learning

Deep learning models can automatically learn and extract features from raw data, greatly reducing the need for manual feature engineering.

- Handling Large and Complex Datasets

Deep learning models are very useful for tasks like image recognition, natural language understanding, and speech recognition. They are good at handling large and complex datasets.

- State-of-the-Art Performance

Deep learning has achieved state-of-the-art results in many areas and outperforms traditional machine learning methods on many tasks.

Limitations of Deep Learning

- Data Requirements

Deep learning models require large amounts of labeled training data to achieve optimal performance. Obtaining such datasets can be challenging and costly, especially in certain domains.

- Computational Resources

Training deep learning models can be computationally intensive and may require powerful hardware like Graphics Processing Units (GPUs) to achieve reasonable training times.

- Interpretability

Deep learning models are often referred to as “black boxes” due to their complex architectures and numerous parameters, making it challenging to interpret and understand how they arrive at their predictions.

Deep Learning vs. Neural Networks

Deep learning refers specifically to neural networks with multiple hidden layers, so deep learning models are a subset of neural networks. Traditional neural networks can also have more than one layer, but deep learning models are characterized by their deep architectures and their ability to learn hierarchical representations of data. Deep learning has shown superior performance in tasks that require learning complex patterns and extracting high-level features from raw data. However, deep learning models are computationally expensive to train and need lots of labeled data. In contrast, traditional (shallow) neural networks are simpler and are used for tasks where deep learning is not needed or not feasible.

Conclusion

Deep learning, with its deep architectures and hierarchical feature learning, has revolutionized the field of machine learning. It has been successful in areas like computer vision, natural language processing, and speech recognition. By automatically learning representations from raw data, deep learning models can tackle complex problems and make accurate predictions. They come with their own challenges, however, such as the need for large labeled datasets and significant computational resources. As researchers and practitioners continue to advance the field, deep learning holds promise for solving complex tasks and driving innovation across different industries.

Partner with Codiste to harness the power of deep learning and drive innovation in your industry. Contact us today to learn more about our Machine Learning Development services and how we can help you achieve your business goals.