Model Context Protocol (MCP) transforms enterprise AI by solving the "stateless agent" problem where AI agents lose context between sessions, causing expensive re-computation, failed multi-step workflows, and scaling limitations.

Key Benefits:

Technical Architecture:

Implementation Approach:

MCP transforms unreliable, stateless AI agents into persistent, context-aware systems with intelligent orchestration that can handle enterprise-scale reasoning tasks while maintaining security and compliance standards. Organizations implementing MCP gain competitive advantages through more reliable AI operations and reduced operational overhead.

The enterprise AI space is crowded with disjointed solutions. Product teams struggle to implement dependable multi-step reasoning systems at scale, ML engineers patch memory systems with fragile workarounds, and CTOs contend with AI agents that forget past interactions.

Model Context Protocol (MCP) is an architectural answer to these challenges. This standardized framework enables AI agents to maintain persistent memory, execute complex reasoning chains, and operate securely in production environments without the technical debt of custom implementations.

One fundamental shortcoming of contemporary AI agents is that they are stateless: they treat every interaction as if it were the first. This stateless design creates significant operational difficulties that directly affect business results.

When implementing AI solutions, enterprise organizations face five crucial pain points:

The root cause isn't the AI models themselves; it's the absence of a standardized protocol for model context. MCP addresses this architectural gap by providing a unified framework for persistent, secure context handling.

The MCP protocol uses a client-server architecture that standardizes how AI agents access, store, and manipulate contextual information. The protocol establishes a secure connection between AI models and external context sources via well-defined interfaces.

The core model context architecture consists of three primary components:

This separation of responsibilities lets AI agents retain deep contextual awareness while preserving enterprise-level security, scalability, and maintainability.
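To make the "well-defined interfaces" concrete: MCP messages are JSON-RPC 2.0 objects, and `resources/read` and `tools/call` are two of the protocol's request methods. The following is a minimal sketch of how a client might build such requests. The resource URI, tool name, and arguments are hypothetical placeholders, and the `makeRequest` helper is an illustration rather than part of any SDK.

```javascript
// Minimal sketch: building MCP-style JSON-RPC 2.0 requests by hand.
// The URI, tool name, and arguments are hypothetical placeholders.
let nextId = 1;

// Wrap a method and its params in a JSON-RPC 2.0 request envelope.
function makeRequest(method, params) {
  return { jsonrpc: '2.0', id: nextId++, method, params };
}

// Ask a context server for the contents of a resource.
const readRequest = makeRequest('resources/read', {
  uri: 'file:///example/customer-notes.txt', // hypothetical resource
});

// Ask a context server to run a tool with arguments.
const toolRequest = makeRequest('tools/call', {
  name: 'lookup_order', // hypothetical tool
  arguments: { orderId: 'A-1001' },
});

console.log(JSON.stringify(readRequest, null, 2));
console.log(JSON.stringify(toolRequest, null, 2));
```

In JSON-RPC, the server's response carries the same `id` as the request, which is how a client matches replies to outstanding calls.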

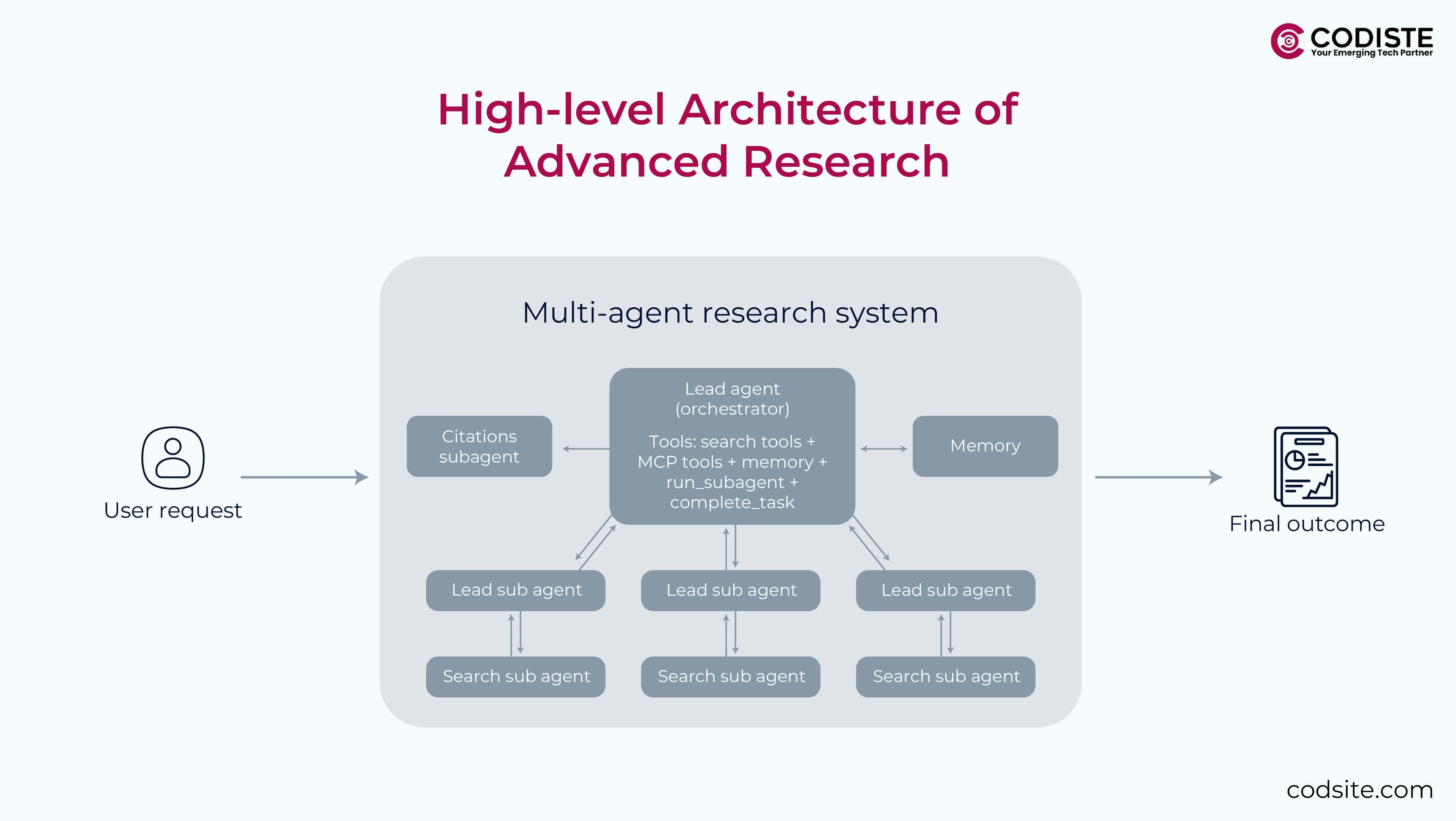

Orchestration Architecture: Managing Complex Multi-Agent Systems

Modern enterprise AI deployments require sophisticated orchestration to handle complex queries and multi-step reasoning processes effectively. The MCP protocol supports a hierarchical orchestration architecture that ensures optimal query routing and agent coordination.

Master Orchestration Layer: The orchestration layer serves as the central nervous system of your AI architecture, making intelligent routing decisions based on query complexity, context requirements, and available specialized agents. This layer analyzes incoming requests and determines the most efficient processing path.
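A routing decision of this kind can be sketched as a small function. This is an illustration under stated assumptions, not a prescribed design: the agent registry, its field names, the numeric complexity scale, and the `human_review` fallback are all hypothetical, and only two of the signals mentioned above (domain and complexity) are modeled.

```javascript
// Hypothetical agent registry: names, domains, and limits are illustrative.
const agents = [
  { name: 'faq_agent', domains: ['support'], maxComplexity: 1 },
  { name: 'billing_agent', domains: ['billing'], maxComplexity: 2 },
  { name: 'analysis_agent', domains: ['billing', 'finance'], maxComplexity: 3 },
];

// Pick the least capable agent that still covers the query's domain and
// complexity, so that heavier agents are reserved for harder queries.
function routeQuery(query, registry) {
  const candidates = registry
    .filter((a) => a.domains.includes(query.domain))
    .filter((a) => a.maxComplexity >= query.complexity)
    .sort((a, b) => a.maxComplexity - b.maxComplexity);
  // Fall back to a person when no registered agent qualifies.
  return candidates.length > 0 ? candidates[0].name : 'human_review';
}

console.log(routeQuery({ domain: 'billing', complexity: 1 }, agents));
console.log(routeQuery({ domain: 'billing', complexity: 3 }, agents));
console.log(routeQuery({ domain: 'legal', complexity: 1 }, agents));
```

Preferring the cheapest qualifying agent is one possible policy; a production router would likely also weigh context requirements and current load.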

Specialized Agent Architecture: Under the master orchestration layer, multiple specialized agents handle domain-specific tasks while maintaining shared context through the MCP protocol. Each agent is optimized for particular use cases:

Sub-Orchestration for High-Complexity Scenarios: When dealing with exceptionally complex queries that require multi-domain expertise or intricate workflow coordination, the architecture supports sub-orchestration layers. These secondary orchestrators manage specialized agent clusters and coordinate with the master orchestrator.

```javascript
// Sub-orchestration example for complex financial analysis
const financialSubOrchestrator = new MCPSubOrchestrator({
  parent: masterOrchestrator,
  domain: 'financial_analysis',
  agents: {
    risk_assessment: { model: 'claude-4-sonnet', context_depth: 'deep' },
    market_analysis: { model: 'claude-4-opus', context_depth: 'comprehensive' },
    compliance_check: { model: 'claude-4-sonnet', context_depth: 'regulatory' }
  },
  escalation_rules: {
    confidence_threshold: 0.85,
    max_processing_time: '5_minutes',
    human_review_triggers: ['high_risk', 'regulatory_uncertainty']
  }
});
```
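The `escalation_rules` object in the configuration above suggests a simple decision: escalate when confidence is too low or when a trigger flag is present. The following sketch shows one way such a check might be evaluated. The shape of the agent result (`confidence`, `flags`) and the `needsHumanReview` helper are assumptions made for illustration.

```javascript
// Same rule values as the escalation_rules object in the configuration.
const escalationRules = {
  confidence_threshold: 0.85,
  human_review_triggers: ['high_risk', 'regulatory_uncertainty'],
};

// Decide whether an agent result should go to a human reviewer.
// Assumed result shape: { confidence: number, flags: string[] }.
function needsHumanReview(result, rules) {
  const lowConfidence = result.confidence < rules.confidence_threshold;
  const triggered = result.flags.some((flag) =>
    rules.human_review_triggers.includes(flag)
  );
  return lowConfidence || triggered;
}

console.log(needsHumanReview({ confidence: 0.92, flags: [] }, escalationRules));
console.log(needsHumanReview({ confidence: 0.8, flags: [] }, escalationRules));
console.log(needsHumanReview({ confidence: 0.95, flags: ['high_risk'] }, escalationRules));
```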

This hierarchical approach enables:

Implementing the MCP protocol in production environments requires careful consideration of LLM architecture patterns, security requirements, and operational constraints. To meet various organizational requirements, the protocol enables a variety of deployment models.

The foundation of any Model Context Protocol deployment is the context server layer. These servers function as specialized microservices that use standardized APIs to make contextual data available.

```javascript
// Example MCP server configuration
const contextServer = new MCPServer({
  transport: 'stdio', // or 'websocket' for distributed deployments
  capabilities: {
    resources: true,
    tools: true,
    prompts: true
  },
  security: {
    authentication: 'oauth2',
    encryption: 'tls1.3',
    rateLimit: '1000/hour'
  }
});
```
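The `rateLimit: '1000/hour'` setting in the configuration above implies some enforcement logic on the server. As a minimal sketch, assuming the string format is `<count>/<unit>` and a fixed-window policy (both assumptions, since the configuration does not specify either), a limiter might look like this. The `parseRateLimit` and `createLimiter` helpers are hypothetical.

```javascript
// Window lengths in milliseconds for the units this sketch understands.
const WINDOW_MS = { second: 1000, minute: 60000, hour: 3600000 };

// Parse a spec such as '1000/hour' into a limit and a window length.
function parseRateLimit(spec) {
  const match = /^(\d+)\/(second|minute|hour)$/.exec(spec);
  if (!match) throw new Error(`Unsupported rate limit: ${spec}`);
  return { limit: Number(match[1]), windowMs: WINDOW_MS[match[2]] };
}

// Fixed-window limiter: each client gets `limit` requests per window.
function createLimiter(spec) {
  const { limit, windowMs } = parseRateLimit(spec);
  const windows = new Map(); // clientId -> { start, count }
  return function allow(clientId, now = Date.now()) {
    const current = windows.get(clientId);
    if (!current || now - current.start >= windowMs) {
      windows.set(clientId, { start: now, count: 1 }); // open a new window
      return true;
    }
    if (current.count < limit) {
      current.count += 1;
      return true;
    }
    return false; // limit reached for this window
  };
}

// Small limit and explicit timestamps to keep the demo deterministic.
const allow = createLimiter('2/hour');
console.log(allow('client-a', 0));
console.log(allow('client-a', 1000));
console.log(allow('client-a', 2000));
console.log(allow('client-a', 3600000));
```

A fixed window is the simplest policy to reason about; sliding windows or token buckets smooth out bursts at window boundaries at the cost of more bookkeeping.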

Context servers can be configured to handle various data sources simultaneously:

The MCP protocol enables sophisticated multi-step reasoning by maintaining context across agent interactions. This capability is crucial for complex enterprise workflows that require sequential decision-making.

The protocol supports several reasoning patterns:

Persistent memory in LLMs ensures that intermediate findings are available throughout the reasoning chain, removing the need for costly re-computation or token-intensive context insertion.
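The idea of reusing intermediate findings can be sketched with a small store shared across steps. This is an illustration only: `ContextStore` is an in-memory stand-in for a persistent context server, and the step names and values are hypothetical.

```javascript
// In-memory stand-in for a persistent context store (hypothetical).
class ContextStore {
  constructor() {
    this.entries = new Map();
  }

  // Return a stored finding, computing and saving it only on first use.
  getOrCompute(key, compute) {
    if (!this.entries.has(key)) {
      this.entries.set(key, compute());
    }
    return this.entries.get(key);
  }
}

// A two-step chain: step 2 depends on the finding produced by step 1.
function runChain(store, counters) {
  const exposure = store.getOrCompute('exposure', () => {
    counters.computed += 1; // counts how often the costly step really runs
    return { total: 120000 };
  });
  const riskLevel = store.getOrCompute('risk', () =>
    exposure.total > 100000 ? 'high' : 'normal'
  );
  return { exposure, riskLevel };
}

const store = new ContextStore();
const counters = { computed: 0 };
runChain(store, counters);
const result = runChain(store, counters); // second run reuses stored findings
console.log(result.riskLevel, counters.computed); // prints "high 1"
```

Because the second run finds both entries already stored, the costly step executes once, which is the re-computation saving described above.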

Production AI systems need strong security measures that keep private information safe while still allowing for contextual intelligence. The MCP protocol includes enterprise-grade security controls designed for regulated environments.

Model Context Protocol implements multi-layered security controls that integrate with existing enterprise identity systems:

Built-in privacy protections in the protocol guarantee adherence to data protection laws:

The MCP protocol supports various deployment architectures that balance security with operational efficiency:

Enterprise AI systems must handle significant load while maintaining low latency and high availability. Model Context Protocol provides several AI inference optimization techniques that improve performance at scale.

Intelligent caching strategies reduce computational overhead and improve response times:
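One common building block for this is a time-to-live (TTL) cache in front of a context source. The sketch below is a minimal illustration, not a description of any particular MCP implementation; the `TtlCache` class, the cache key, and the TTL value are hypothetical.

```javascript
// Minimal TTL cache: entries expire a fixed time after they are written.
class TtlCache {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  // Return the cached value, or undefined if missing or expired.
  get(key, now = Date.now()) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (now >= entry.expiresAt) {
      this.entries.delete(key); // evict stale context
      return undefined;
    }
    return entry.value;
  }

  set(key, value, now = Date.now()) {
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
  }
}

// Explicit timestamps keep the demo deterministic.
const cache = new TtlCache(1000);
cache.set('customer:42', { tier: 'gold' }, 0);
console.log(cache.get('customer:42', 500));  // still fresh
console.log(cache.get('customer:42', 1000)); // expired
```

The TTL bounds how stale cached context can become, so it should be chosen per data source rather than globally.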

The MCP protocol scales horizontally through distributed deployment patterns:

A key strength of the MCP protocol is how smoothly it integrates with existing enterprise infrastructure. The protocol provides standardized connections for popular enterprise systems.

Direct access to business databases makes it possible to get context in real time:

Model Context Protocol connects with existing enterprise APIs through standardized interfaces:

Secure access to enterprise document repositories:

Production AI systems need extensive monitoring to operate dependably. Model Context Protocol's observability features integrate with existing monitoring infrastructure.

Real-time visibility into context server performance:
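As one illustration of such visibility, request latency is often summarized with percentiles rather than averages, because a few slow requests can hide behind a healthy mean. The sketch below assumes a hypothetical `LatencyTracker` that a context server could feed with per-request timings; it uses the nearest-rank percentile method.

```javascript
// Collects request latencies and reports nearest-rank percentiles.
class LatencyTracker {
  constructor() {
    this.samples = [];
  }

  record(ms) {
    this.samples.push(ms);
  }

  // Nearest-rank percentile: the smallest sample such that at least
  // p percent of all samples are less than or equal to it.
  percentile(p) {
    if (this.samples.length === 0) return undefined;
    const sorted = [...this.samples].sort((a, b) => a - b);
    const rank = Math.ceil((p / 100) * sorted.length);
    return sorted[Math.max(rank, 1) - 1];
  }
}

const tracker = new LatencyTracker();
[12, 15, 11, 240, 14].forEach((ms) => tracker.record(ms)); // hypothetical timings
console.log(tracker.percentile(50)); // prints 14
console.log(tracker.percentile(95)); // prints 240
```

Here the median looks healthy while the 95th percentile exposes the one slow request, which is the kind of signal an alerting rule would watch.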

Continuous security assessment and threat detection:

MCP protocol implementations must demonstrate business value while keeping costs down. The protocol offers several ways to reduce costs.

Reduced reliance on token-intensive context injection, which is constrained by language model token limits:
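As a rough illustration of the arithmetic, the sketch below compares re-injecting a full context into every turn with retrieving only a relevant slice per turn. All numbers are hypothetical inputs chosen for the example, not measurements, and the function names are made up for this sketch.

```javascript
// Tokens consumed when the full context is re-sent on every turn.
function tokensWithFullInjection(turns, contextTokens, promptTokens) {
  return turns * (contextTokens + promptTokens);
}

// Tokens consumed when only a retrieved slice is sent on every turn.
function tokensWithRetrieval(turns, retrievedTokens, promptTokens) {
  return turns * (retrievedTokens + promptTokens);
}

// Hypothetical workload: 10 turns, 8,000-token context, 500-token slice.
const turns = 10;
const promptTokens = 200;
const full = tokensWithFullInjection(turns, 8000, promptTokens);
const retrieved = tokensWithRetrieval(turns, 500, promptTokens);
const savedFraction = 1 - retrieved / full;

console.log(full);      // prints 82000
console.log(retrieved); // prints 7000
console.log(`${(savedFraction * 100).toFixed(1)}% fewer input tokens`);
```

The saving scales with the ratio between the full context and the retrieved slice, so the real figure depends entirely on the workload.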

Streamlined operations reduce management overhead:

Successful Model Context Protocol deployment requires careful planning and phased implementation. Organizations should follow a structured approach that minimizes risk while maximizing value.

Start with a limited scope to validate the approach:

Expand to additional use cases and data sources:

Full-scale production deployment with comprehensive features:

The MCP protocol has dramatically changed how enterprise AI systems manage context and reasoning. Early adoption of this protocol can give businesses a competitive edge through more powerful, dependable, and affordable AI solutions.

The security model is strong, the scaling solutions have been tried and tested, and the technical foundation is established. The question is not whether Model Context Protocol should be implemented, but rather how it performs in your particular use case and how fast you can start the transition.

To plan your MCP implementation strategy, set up a technical meeting with our AI architecture team. Within ninety days, we will evaluate your existing systems, pinpoint integration points, and develop a personalized roadmap that yields quantifiable outcomes.
