DALL-E 2 and GPT-3.5 CPT represent the cutting edge of AI technology, pushing the boundaries of image generation and conversational AI. DALL-E 2’s ability to generate high-quality images from textual descriptions and GPT-3.5 CPT’s advanced conversational capabilities create a powerful synergy that opens up new possibilities for creativity, understanding, and interaction. In this blog, we will delve into the technical details of DALL-E 2 and GPT-3.5 CPT, exploring their individual strengths and how they can work together to revolutionize the field of AI. We will also discuss the ethical considerations and future implications of these advanced AI systems.

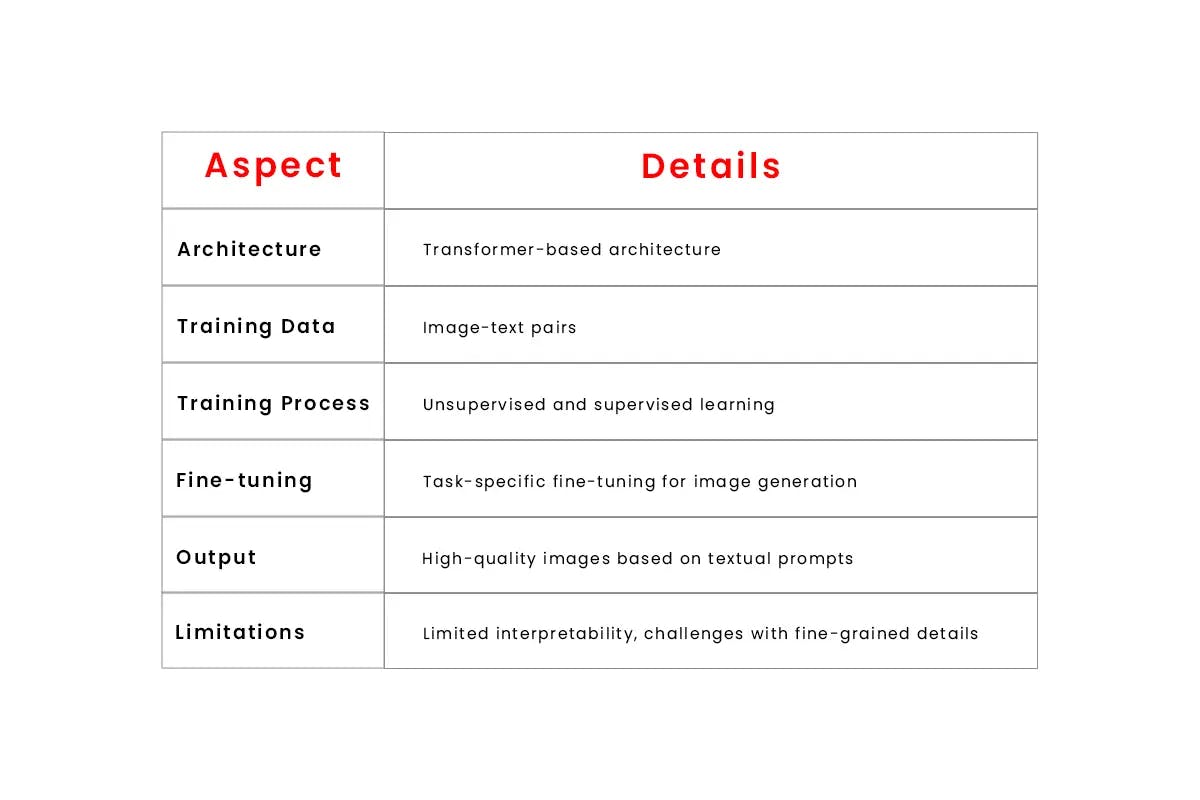

DALL-E 2 represents a remarkable achievement in image generation using transformer-based models. It builds upon the success of the original DALL-E model, pushing the boundaries of what is possible in terms of generating high-quality images from textual descriptions. Let’s delve deeper into the technical aspects of DALL-E 2.

Transformers sit at the core of DALL-E 2. The architecture has proven highly effective in NLP tasks, and in DALL-E 2 it powers the CLIP text and image encoders that connect captions to pictures, while a diffusion-based decoder renders the final image. Transformers excel at capturing long-range dependencies and contextual relationships in data, making them well suited to modeling complex image-text interactions.
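To make the idea of long-range dependencies concrete, here is a minimal sketch of scaled dot-product attention, the operation at the heart of a transformer. It is a toy in plain Python, not DALL-E 2’s actual code; the function names `softmax` and `attention` and the tiny vectors are assumptions chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights): one output vector and one weight row per query.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this query with every key, regardless of position.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# One query and three positions; only the last (most distant) key matches it.
queries = [[1.0, 0.0]]
keys = [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
outputs, weights = attention(queries, keys, values)
```

Because every query is scored against every key, the matching position receives the largest weight no matter how far away it sits in the sequence.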

Traditionally, transformers have been used mainly for sequential data such as language. Models like DALL-E 2 apply them to images as well, treating an image as a two-dimensional grid of pixels. Dividing the image into patches and flattening each patch turns it into a sequence that a transformer can process in much the same way as text.
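The patch-and-flatten step can be sketched in a few lines. This is a simplified illustration on a single-channel toy image, not DALL-E 2’s preprocessing code; the function name `image_to_patch_sequence` and the 4x4 image are hypothetical.

```python
def image_to_patch_sequence(image, patch_size):
    """Split a 2-D grid (a list of rows) into non-overlapping square patches
    and flatten each patch into a 1-D list, in row-major patch order."""
    height = len(image)
    width = len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    sequence = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Read the patch row by row so its pixels form one flat vector.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            sequence.append(patch)
    return sequence

# A toy 4x4 "image" whose pixel values are 0..15.
toy_image = [[row * 4 + col for col in range(4)] for row in range(4)]
patch_sequence = image_to_patch_sequence(toy_image, patch_size=2)
# patch_sequence == [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

The result is a sequence of four patch vectors, which plays the same role for a transformer as a sequence of word tokens does in text.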

DALL-E 2’s remarkable text-to-image synthesis capability allows it to generate images based on textual prompts. Given a textual description, the model learns to predict the corresponding image, effectively bridging the gap between language and visual representations.

During training, DALL-E 2 relies on a massive dataset of image-text pairs. Its components learn to recover an image from its paired caption, and they are optimized with likelihood-based objectives: the decoder, a diffusion model, is trained to remove noise that was added to a training image, a loss derived from a bound on the data likelihood. This process lets the model learn the intricate relationships between textual descriptions and visual content, so it can generate realistic images from textual input.
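DALL-E 2’s image decoder is a diffusion model, and the likelihood-based training of such models is commonly reduced to a noise-prediction loss. The sketch below shows that generic loss on a toy example, under the assumption of a standard denoising setup; it is not the exact objective OpenAI used, and `add_noise`, `denoising_loss`, and the pixel values are illustrative names and numbers.

```python
import math
import random

def add_noise(clean, noise, alpha_bar):
    """Forward diffusion step: blend clean data with Gaussian noise.

    alpha_bar near 1 keeps the image almost clean; near 0 it is mostly noise.
    """
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(clean, noise)
    ]

def denoising_loss(predicted_noise, true_noise):
    """Mean squared error between the predicted and the actual noise."""
    return sum(
        (p - t) ** 2 for p, t in zip(predicted_noise, true_noise)
    ) / len(true_noise)

rng = random.Random(0)
clean_pixels = [0.2, -0.5, 0.9, 0.0]           # a tiny stand-in for an image
noise = [rng.gauss(0.0, 1.0) for _ in clean_pixels]
noisy_pixels = add_noise(clean_pixels, noise, alpha_bar=0.5)

# A real model would predict the noise from noisy_pixels and the caption.
# Here we compare two hand-made guesses instead of a trained network.
perfect_loss = denoising_loss(noise, noise)                    # exactly 0.0
zero_guess_loss = denoising_loss([0.0] * len(noise), noise)    # positive
```

Training pushes the model’s noise predictions toward the true noise across many image-text pairs and noise levels, which is how it learns to turn pure noise into an image that fits the caption.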

One of the notable aspects of DALL-E 2 is its ability to generate highly diverse images based on a given prompt. It can produce a wide range of outputs, exploring different variations, styles, and interpretations of the textual input. This diversity stems from the stochastic nature of the generative process and the inherent capacity of the model to explore the vast image space.
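The effect of randomness on diversity can be illustrated with a toy stand-in for a generative model. This is only a sketch: `toy_generate` is a hypothetical function that mixes a prompt-dependent value with seeded random numbers, and it does not call DALL-E 2 or any real API.

```python
import random

def toy_generate(prompt, seed, size=4):
    """Stand-in for a generative model: the prompt sets a fixed bias,
    and the random seed supplies the starting noise."""
    rng = random.Random(seed)
    prompt_bias = (sum(map(ord, prompt)) % 10) / 10
    return [round(prompt_bias + rng.random(), 3) for _ in range(size)]

prompt = "an armchair in the shape of an avocado"
first = toy_generate(prompt, seed=0)
second = toy_generate(prompt, seed=1)
repeat = toy_generate(prompt, seed=0)
```

The same prompt with different seeds yields different outputs (`first` and `second`), while reusing a seed reproduces the result exactly (`first` and `repeat`). Real image generators behave analogously: each run starts from fresh random noise, so one prompt can produce many distinct images.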

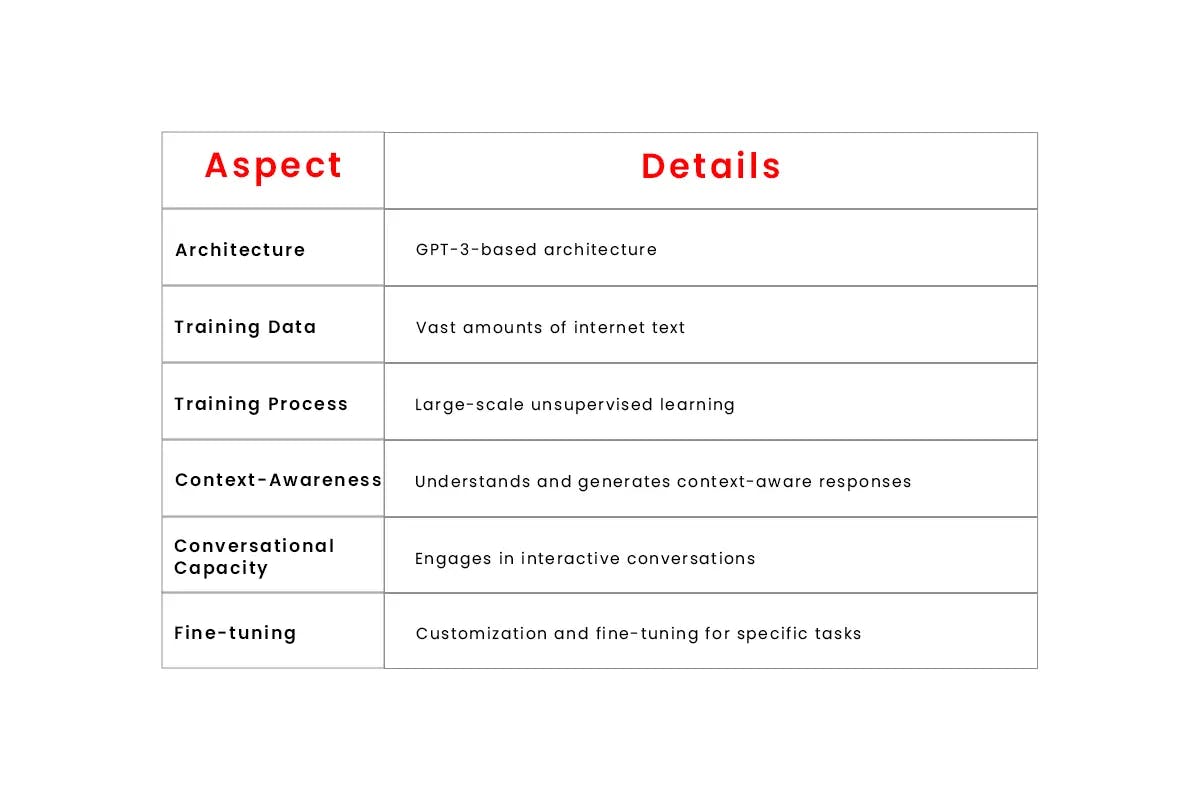

GPT-3.5 CPT represents a significant milestone in conversational AI and language modeling. Let’s explore the technical details of this powerful model.

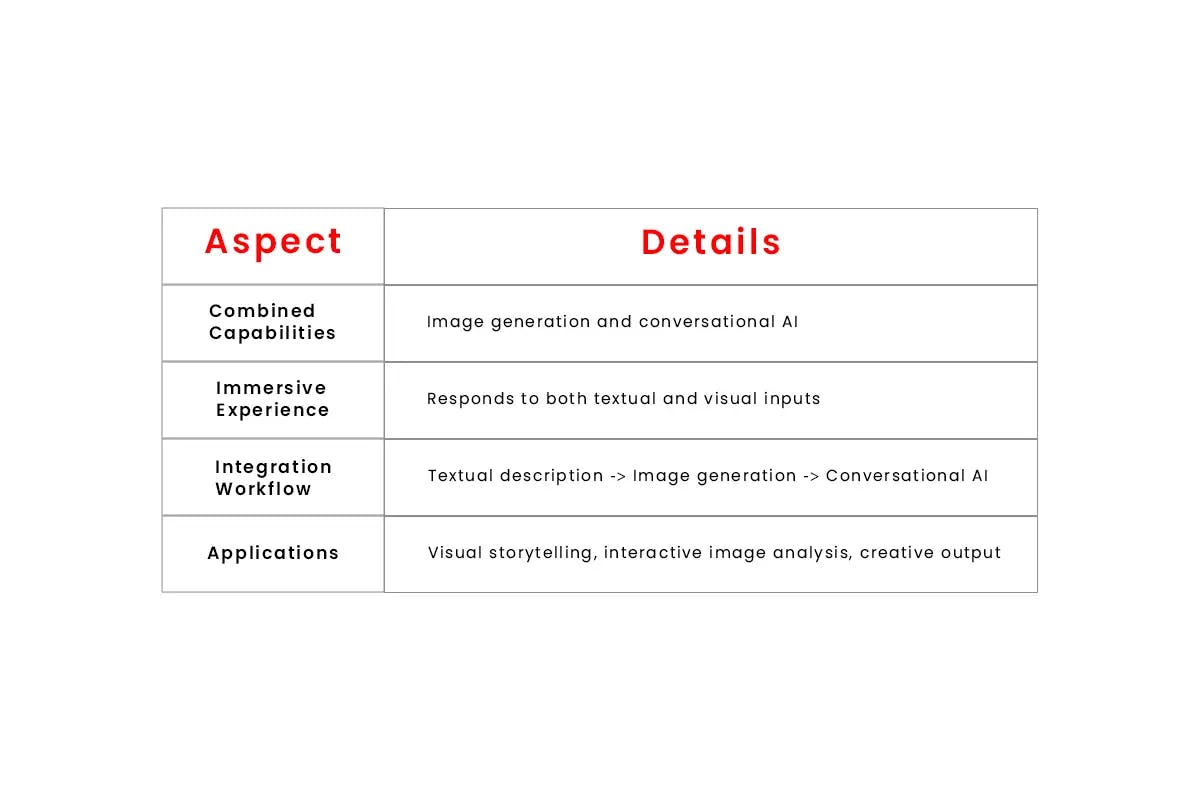

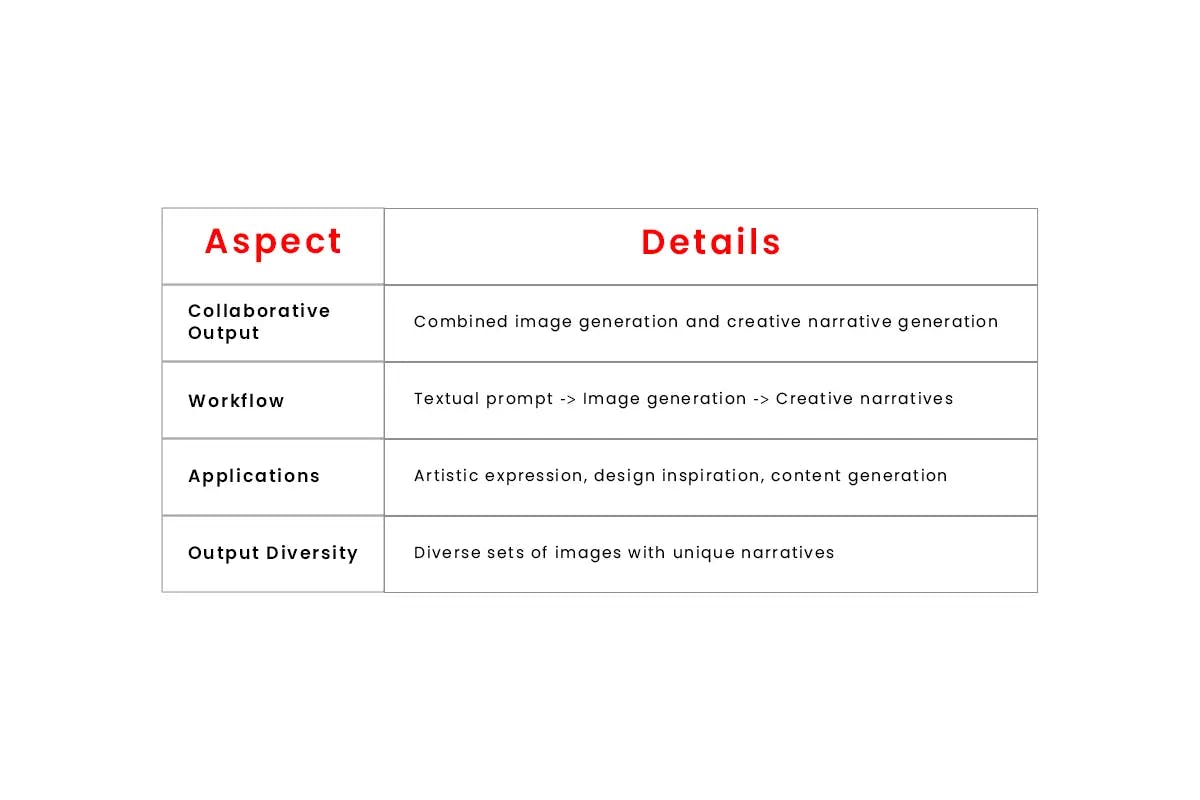

The combination of DALL-E 2 and GPT-3.5 CPT presents an exciting opportunity for synergy. Let’s explore how these models can complement each other.

As we explore the capabilities of DALL-E 2 and GPT-3.5 CPT, it is crucial to address ethical considerations and anticipate their impact on the future of AI.

The convergence of DALL-E 2 and GPT-3.5 CPT represents a significant leap in AI capabilities. Developed by OpenAI, these models combine image generation and conversational AI to revolutionize creativity, understanding, and interaction. With responsible AI development at the forefront, Codiste, a machine learning company, is committed to ethical use and aims to help shape the future of AI. The impact on industries like art, entertainment, and e-commerce is expected to be profound. This synergy between AI and human intelligence promises remarkable outcomes, where AI becomes a powerful tool for innovation and advancement.
