MCP Server vs. API Architecture Decision Guide:

Choose MCP Servers for:

Choose Traditional APIs for:

Key Decision Factors:

Hybrid Approach: MCP should be used for AI-intensive processes, while APIs should be used for routine business logic.

Technology leaders now face crucial decisions about integrating AI agents into company infrastructure. As enterprises scale their AI initiatives, the choice between Model Context Protocol (MCP) servers and conventional API designs can significantly affect performance, security, and long-term scalability.

This architectural choice goes beyond technology: its goal is to align your AI infrastructure with business objectives while maintaining operational excellence.

The Model Context Protocol (MCP) is a standardized approach to AI agent communication and context management. Unlike standard request-response patterns, MCP servers maintain persistent connections and contextual state across interactions.
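To make the contrast concrete, here is a minimal Python sketch of server-side session state. It does not use any MCP SDK: `SessionStore`, `open_session`, and `add_turn` are hypothetical names chosen for illustration, and a real MCP server would manage sessions through its SDK and transport layer. The point is only that the client sends the new message while earlier turns stay on the server.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Session:
    """Context the server keeps for one client between interactions."""
    session_id: str
    history: list[str] = field(default_factory=list)


class SessionStore:
    """Hypothetical in-memory store: state lives on the server, keyed by session ID."""

    def __init__(self) -> None:
        self._sessions: dict[str, Session] = {}

    def open_session(self) -> str:
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = Session(session_id)
        return session_id

    def add_turn(self, session_id: str, message: str) -> list[str]:
        # The client sends only the new message; earlier turns are already on the server.
        session = self._sessions[session_id]
        session.history.append(message)
        return list(session.history)


store = SessionStore()
sid = store.open_session()
store.add_turn(sid, "Summarize Q3 sales")
print(store.add_turn(sid, "Now compare with Q2"))
# ['Summarize Q3 sales', 'Now compare with Q2']
```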

The protocol was designed specifically for AI workloads and addresses common problems in agent orchestration and data flow management. MCP servers excel in settings that demand continuous context retention and sophisticated multi-turn interactions.

Key characteristics of MCP architecture include:

For more than 20 years, REST APIs have been the dominant way for businesses to connect their systems. Their stateless design, well-understood patterns, and extensive tooling make them the default choice for system and API integration.

Traditional APIs use a request-response architecture in which each transaction is independent. This stateless design has proven valuable in enterprise settings because it is predictable and scales as business needs grow.
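For comparison, a stateless handler in the same minimal style might look like the following. The `handle_request` function and its `items` payload field are hypothetical; what matters is that the response depends only on the request body, so no state survives between calls.

```python
import json


def handle_request(body: str) -> str:
    """Stateless handler: everything needed to answer is in the request itself.

    Nothing is stored between calls, so any replica can serve any request
    and return the same response for the same input.
    """
    request = json.loads(body)
    items = request["items"]  # hypothetical payload field
    total = sum(item["price"] * item["quantity"] for item in items)
    return json.dumps({"order_total": round(total, 2)})


payload = json.dumps({"items": [{"price": 9.99, "quantity": 2}, {"price": 5.0, "quantity": 1}]})
print(handle_request(payload))
```

Because the handler keeps nothing between calls, any instance behind a load balancer can take the next request, which is what makes this design straightforward to scale horizontally.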

Core advantages of traditional API architecture:

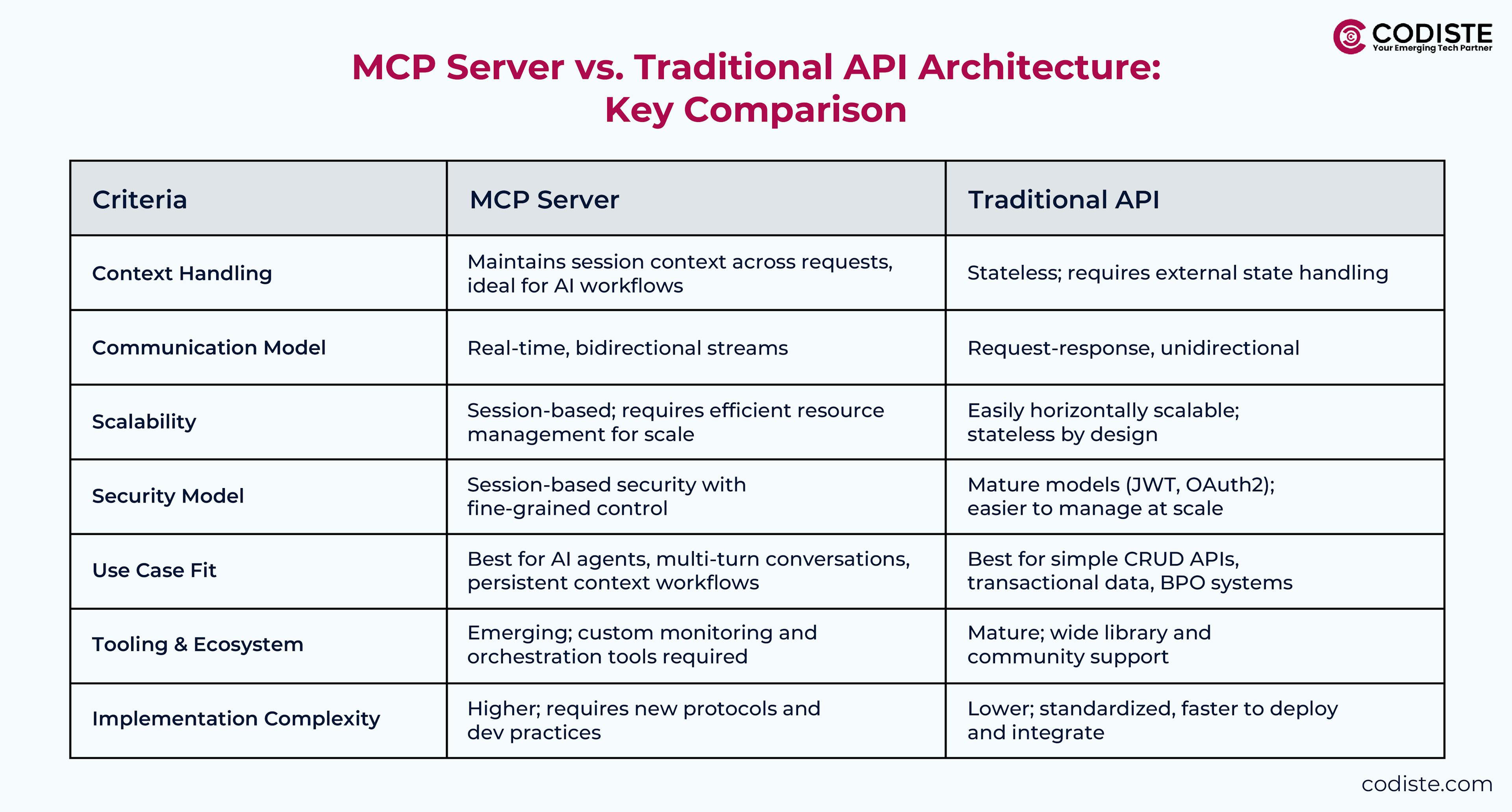

MCP servers and traditional APIs have very different scalability profiles, mostly because they rest on different architectural foundations.

Because MCP servers maintain persistent connections, each instance may support fewer concurrent users. However, they reduce the computational overhead of reconstructing context and perform exceptionally well in scenarios that require intricate state management.

Because MCP connections are persistent, memory use grows with the number of active sessions rather than with request volume. This produces a different scaling pattern from stateless systems, where resource use tracks the number of requests in flight.
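A rough back-of-the-envelope model shows why the two scaling patterns diverge. The sketch assumes fixed per-session and per-request memory costs, and every input number is hypothetical; the stateless case uses Little's law (requests in flight = arrival rate × average latency).

```python
def stateful_memory_mb(active_sessions: int, mb_per_session: float) -> float:
    # Persistent sessions: memory tracks how many sessions are open,
    # even if most of them are idle at any given moment.
    return active_sessions * mb_per_session


def stateless_memory_mb(requests_per_second: float, avg_latency_s: float,
                        mb_per_request: float) -> float:
    # Stateless requests: memory tracks requests in flight
    # (Little's law: in-flight = arrival rate x time in system).
    in_flight = requests_per_second * avg_latency_s
    return in_flight * mb_per_request


# Hypothetical inputs, for illustration only.
sessions = stateful_memory_mb(active_sessions=10_000, mb_per_session=2.0)
requests = stateless_memory_mb(requests_per_second=500, avg_latency_s=0.2, mb_per_request=2.0)
print(f"stateful: {sessions:.0f} MB, stateless: {requests:.0f} MB")
```

With these assumed inputs, 10,000 mostly idle sessions need far more memory than 500 requests per second, because one model pays for open sessions and the other only for work in progress.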

Traditional APIs usually scale better for simple, high-volume interactions. MCP servers, on the other hand, can be more resource-efficient for intricate AI procedures that need persistent context.

In the API vs MCP debate, the best architecture often depends on how much control you need over AI agent interactions.

MCP servers use persistent session management to give agents fine-grained control over their behaviour. This enables complex orchestration scenarios in which several agents collaborate to complete challenging tasks.

Traditional APIs depend on external orchestration tools for intricate workflows. This adds architectural complexity, but it also leaves teams free to choose best-of-breed orchestration solutions for their API integrations.

As a result, complex orchestration usually calls for different strategies in traditional API and MCP server setups.
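The external-orchestration pattern for traditional APIs can be sketched as a small pipeline. The two "services" below are local stand-ins for REST endpoints and all names are hypothetical; the orchestrator, not the services, holds the workflow state and passes it explicitly to each step.

```python
from typing import Callable

# Each "service" is a stateless function standing in for a REST endpoint
# (hypothetical; in practice these would be HTTP calls).
Step = Callable[[dict], dict]


def extract_entities(ctx: dict) -> dict:
    return {"entities": [w for w in ctx["text"].split() if w.istitle()]}


def score_sentiment(ctx: dict) -> dict:
    positive = {"great", "good", "excellent"}
    hits = sum(w.lower().strip(".,!") in positive for w in ctx["text"].split())
    return {"sentiment": "positive" if hits else "neutral"}


def run_pipeline(steps: list[Step], initial: dict) -> dict:
    """External orchestrator: it owns the workflow state, not the services."""
    ctx = dict(initial)
    for step in steps:
        # Pass the accumulated context explicitly, then merge the step's output.
        ctx.update(step(ctx))
    return ctx


result = run_pipeline([extract_entities, score_sentiment],
                      {"text": "Acme shipped a great release"})
print(result)
```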

AI systems need more than regular application security: they must also protect models, keep data private, and comply with AI governance frameworks.

MCP servers protect data and handle authentication and authorization through built-in mechanisms at the protocol level. The persistent connection model enables more sophisticated security controls, but it also enlarges the attack surface.

Because MCP connections are stateful, sessions must be managed carefully to keep unauthorized users out and to ensure that sensitive data is properly cleaned up.
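The session hygiene described above can be sketched as follows, assuming an in-memory store, a caller identity already established by authentication, and an idle timeout. `SessionManager` and its methods are hypothetical. Note that `dict.clear()` only drops Python references; it does not guarantee that the underlying memory is zeroed.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SecureSession:
    owner: str                                 # authenticated principal that opened it
    last_seen: float
    data: dict = field(default_factory=dict)   # may hold sensitive context


class SessionManager:
    """Hypothetical manager: owner checks plus idle-timeout cleanup."""

    def __init__(self, idle_timeout_s: float, clock=time.monotonic) -> None:
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock
        self._sessions: dict[str, SecureSession] = {}

    def open(self, session_id: str, owner: str) -> None:
        self._sessions[session_id] = SecureSession(owner, self.clock())

    def get(self, session_id: str, caller: str) -> Optional[SecureSession]:
        self.evict_expired()
        session = self._sessions.get(session_id)
        if session is None or session.owner != caller:
            return None  # unknown, expired, or belongs to someone else
        session.last_seen = self.clock()
        return session

    def close(self, session_id: str) -> None:
        session = self._sessions.pop(session_id, None)
        if session is not None:
            session.data.clear()  # drop references to sensitive context

    def evict_expired(self) -> None:
        now = self.clock()
        expired = [sid for sid, s in self._sessions.items()
                   if now - s.last_seen > self.idle_timeout_s]
        for sid in expired:
            self.close(sid)
```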

Traditional APIs often offer better-established security protocols and audit trails for businesses with stringent compliance needs.

In MCP vs. traditional API integration scenarios, the complexity of deploying and maintaining each architecture varies greatly with an organization's skills and existing infrastructure.

Implementing MCP servers requires specialized protocol knowledge and careful attention to connection management, state persistence, and error-handling patterns.

Organizations must also develop new operational procedures to run stateful applications at scale and to monitor persistent connections.

Because performance requirements vary significantly by use case, the choice of architecture is crucial for meeting SLAs and user expectations in any MCP vs. API comparison.

MCP servers perform exceptionally well when a workload calls for complex state management and frequent context access. The persistent connection model removes the overhead of re-establishing a connection and rebuilding context for every interaction.
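The cost of rebuilding context can be estimated with simple arithmetic. This simplified model assumes every turn adds the same number of tokens and that a stateless client must resend the full history on each request; it ignores responses, compression, and any provider-side caching.

```python
def tokens_sent(turns: int, tokens_per_turn: int, stateful: bool) -> int:
    """Total tokens the client transmits over one conversation.

    Stateless: turn k resends all k turns so the server can rebuild context,
    so the total is t * n(n+1)/2 and grows quadratically with length.
    Stateful: the server keeps the history, so each turn sends only itself.
    """
    if stateful:
        return turns * tokens_per_turn
    return tokens_per_turn * turns * (turns + 1) // 2


print(tokens_sent(20, 200, stateful=True), tokens_sent(20, 200, stateful=False))
```

For a hypothetical 20-turn conversation at 200 tokens per turn, the stateless client sends 42,000 tokens versus 4,000 for the stateful one. The trade-off is that the stateful server must hold that history in memory.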

Traditional APIs perform best for stateless operations, where they benefit from established caching strategies, content delivery networks (CDNs), and load-balancing techniques.

For conventional APIs, response caching, database query optimisation, and CDNs deliver notable performance gains. These techniques are backed by mature tooling ecosystems and extensive documentation.

Choosing the best architecture means weighing the differences between MCP and traditional APIs against your organisation's specific context and technical needs.

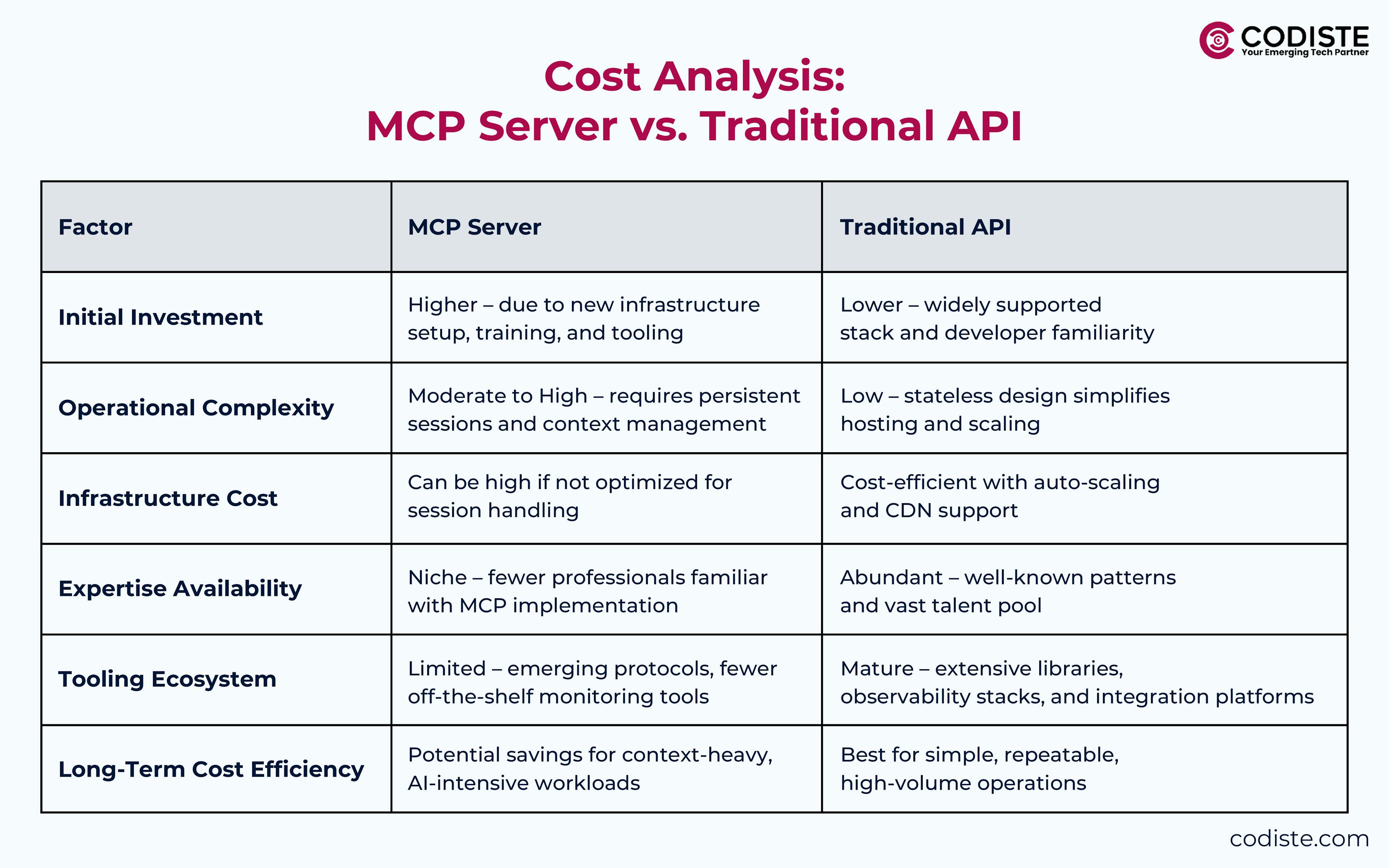

Understanding each architecture's total cost of ownership supports long-term strategic decisions throughout the MCP vs API evaluation.

For MCP servers, persistent connections and the memory needed for state management may raise infrastructure costs. In some applications, however, the lower computational overhead of not rebuilding context can offset these costs.

Traditional API Cost Benefits

Many businesses benefit from hybrid architectures that use both MCP servers and standard APIs, depending on the needs of each use case.

A phased migration lets companies pilot MCP servers for selected AI operations while keeping their existing API integration infrastructure for established use cases.
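One common way to run such a phased migration is a deterministic percentage rollout. The sketch below, with a hypothetical `use_mcp_backend` function and hypothetical tenant IDs, hashes each tenant into one of 100 buckets so that its assignment stays stable across requests.

```python
import hashlib


def use_mcp_backend(tenant_id: str, rollout_percent: int) -> bool:
    """Deterministically place a tenant in the MCP pilot group.

    Hashing keeps each tenant's assignment stable, so a tenant does not flip
    between backends mid-workflow. Raising rollout_percent only ever adds
    tenants to the pilot, because each tenant's bucket never changes.
    """
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100  # 0..99
    return bucket < rollout_percent


pilot = [t for t in ("tenant-a", "tenant-b", "tenant-c") if use_mcp_backend(t, 30)]
print(pilot)
```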

Deploying MCP servers where AI-heavy workflows need them, while keeping traditional APIs for ordinary business logic, is a balanced way to get the most out of each architecture.

Consider using an API gateway that routes each request to the appropriate backend service based on its needs and characteristics.
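Routing logic at such a gateway might look like the sketch below. The `Request` fields, the backend URLs, and the routing rule are all hypothetical assumptions; a real gateway would typically express this as configuration rather than application code.

```python
from dataclasses import dataclass


@dataclass
class Request:
    path: str
    needs_session_context: bool = False  # hypothetical flag set upstream
    is_agent_call: bool = False          # hypothetical flag for AI-agent traffic


# Hypothetical backend addresses, for illustration only.
MCP_BACKEND = "http://mcp-server.internal"
REST_BACKEND = "http://rest-api.internal"


def route(request: Request) -> str:
    """Pick a backend from the request's characteristics."""
    if request.is_agent_call or request.needs_session_context:
        return MCP_BACKEND   # AI-heavy, context-dependent traffic
    return REST_BACKEND      # routine, stateless business logic


print(route(Request("/orders/17")), route(Request("/assistant", is_agent_call=True)))
```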

As AI and infrastructure technologies evolve, organizations need architectures that can adapt to changing requirements and evolving standards.

MCP is a new protocol designed specifically for AI workloads, so it may align more closely with future AI infrastructure developments. Because it is relatively young, however, it carries risk in terms of long-term support and ecosystem growth.

Traditional APIs are stable and predictable, but as AI requirements get more advanced, additional adaptation layers may be required.

Evaluation Criteria for Future-Proofing:

Choosing between MCP servers and APIs is a strategic decision that will shape your organization's AI capabilities for years.

Organisations with established infrastructure and standard API integration requirements benefit from traditional APIs' proven scalability, mature tooling, and widespread expertise. Their stateless design makes scaling predictable, and their security patterns are well understood.

AI-intensive applications requiring persistent context and complex state management benefit from MCP servers. MCP's native context awareness and bidirectional communication may help teams building advanced AI agents and workflows.

Codiste specializes in helping enterprises navigate complex AI architecture decisions. Our team of AI and infrastructure experts provides comprehensive assessment services, proof-of-concept implementations, and migration planning to ensure your architecture choice aligns with both current needs and future growth objectives.

Whether you choose MCP servers, traditional APIs, or a hybrid approach, Codiste delivers the expertise and implementation support needed to transform your AI infrastructure vision into reality. Contact our solutions architects to begin your AI architecture evaluation and implementation journey.
